Machine Vision & Intelligence

Determining Driver Identity and Intent Using Vehicle Sensors and Context

In this project, we propose to use multi-modal data from in-vehicle sensors, such as 3D and infrared sensors from Qualcomm, together with contextual information and new data fusion, machine vision, and artificial intelligence techniques, to accurately identify a driver and his/her intent. Although face recognition techniques have been developed using 3D and infrared sensors, accurately identifying a driver remains challenging in many circumstances. Moreover, although techniques exist for pose and gaze estimation and for gesture recognition, accurately understanding driver intent is still far from solved. Hence, leveraging the accuracy and capabilities of newly available sensor technologies, as well as the ability to capture contextual information about both the driver inside the vehicle and the road conditions outside it, we propose to develop a robust system to analyze and estimate a driver’s intent. Such a system can have a significant impact on enabling intelligent assistance and guidance for the driver’s vehicle as well as for neighboring vehicles. We next briefly describe our related work, followed by the three primary aspects of our proposed research.

Related Work

We have worked on various human/scene understanding projects using depth/stereo cameras, including semantic/hand segmentation [1, 2], hand pose tracking [3], and gesture (fingerspelling) recognition [4]. For semantic/hand segmentation, we proposed a depth-adaptive deep neural network that takes a step toward learning depth-invariant features, achieving higher robustness and accuracy [1]. To achieve this, the network adapts its receptive field not only for each layer but also for each neuron at each spatial location. For segmentation tasks, we also developed a random forest framework that learns the weights, shapes, and sparsities of features for real-time semantic segmentation [2]. We proposed an unconstrained filter that extracts optimal features by learning these weights, shapes, and sparsities, and presented a framework that learns the flexible filters using an iterative optimization algorithm and then segments input images using the learned filters. For hand pose tracking, we proposed an efficient hand tracking system that does not require high-performance GPUs [3]. We track hand articulations by minimizing the discrepancy between the depth map from the sensor and a computer-generated hand model, and we re-initialize the hand pose at each frame using finger detection and classification. For gesture (fingerspelling) recognition, we proposed an automatic fingerspelling recognition system that applies convolutional neural networks to depth maps [4].
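
As a rough illustration of the depth-adaptive receptive field idea, the sketch below varies the dilation of a 3x3 convolution per pixel in inverse proportion to depth. This is a minimal sketch, not the network from [1]: the inverse-depth rule, the reference depth `ref_depth`, the clamp `max_dilation`, and the helper names `adaptive_dilation` and `depth_adaptive_conv` are assumptions made for illustration. The motivation is the pinhole-camera fact that an object's image size scales roughly with 1/depth, so a nearer object needs a wider sampling stride to cover the same physical extent.

```python
def adaptive_dilation(depth, ref_depth, base=1, max_dilation=8):
    """Per-pixel dilation rate that shrinks as depth grows (assumed rule).

    Sampling with stride base * ref_depth / depth covers roughly the same
    physical extent regardless of how far the surface is from the camera.
    """
    if depth <= 0:
        return base  # invalid depth reading: fall back to the base rate
    d = round(base * ref_depth / depth)
    return max(1, min(max_dilation, d))


def depth_adaptive_conv(image, depth, kernel, ref_depth):
    """3x3 convolution whose dilation varies per output pixel.

    image, depth: 2-D lists of equal shape; kernel: 3x3 list of weights.
    Samples that fall outside the image contribute zero (zero padding).
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            d = adaptive_dilation(depth[y][x], ref_depth)
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    # Kernel taps are spread d pixels apart around (y, x).
                    sy, sx = y + (ky - 1) * d, x + (kx - 1) * d
                    if 0 <= sy < h and 0 <= sx < w:
                        acc += kernel[ky][kx] * image[sy][sx]
            out[y][x] = acc
    return out
```

In a learned network the kernel weights would be trained and the per-pixel dilation would feed a differentiable sampling step; the loops here only show how depth can steer where each neuron looks.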

We have also developed efficient gesture detection techniques as part of a remote physical therapy training system [5, 6]. In this system, the patient’s performance needs to be carefully evaluated, which requires detecting gestures in the patient’s movements. To this end, we proposed a gesture-based dynamic time warping algorithm that segments user gestures in real time [5]. The algorithm enables real-time evaluation and guidance by comparing the user’s gesture with a template to assess performance accuracy, while simultaneously segmenting the gestures. For more complex movements that consist of multiple sub-actions, we proposed a sub-action detection algorithm based on a Hidden Markov Model (HMM) [6]. The HMM-based algorithm models a gesture with multiple sub-actions as a Markov process and uses two-phase dynamic programming to improve sub-action detection accuracy.
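
The template comparison described above can be illustrated with classic dynamic time warping (DTW). The sketch below assumes each frame is a fixed-length feature vector (for example, joint coordinates) and uses a Euclidean local cost. `dtw_distance` and `best_match_end` are hypothetical helpers, and the free-start subsequence variant in `best_match_end` is one common way to spot a template inside a longer stream, not necessarily the gesture-based algorithm of [5].

```python
import math


def dtw_distance(seq_a, seq_b):
    """Classic DTW distance between two sequences of feature vectors."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = math.dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j],      # a advances, b repeats
                                     cost[i][j - 1],      # b advances, a repeats
                                     cost[i - 1][j - 1])  # both advance
    return cost[n][m]


def best_match_end(template, stream):
    """Subsequence DTW: return (end_index, cost) of the best template match.

    The template may start at any stream frame (free start), so the lowest
    cost in the last template row marks where a gesture most likely ends.
    """
    n, m = len(template), len(stream)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    for j in range(m + 1):
        cost[0][j] = 0.0  # free start anywhere in the stream
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = math.dist(template[i - 1], stream[j - 1])
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    end = min(range(1, m + 1), key=lambda j: cost[n][j])
    return end - 1, cost[n][end]  # index of the last matched stream frame
```

Because DTW tolerates differences in speed, a patient who performs the movement more slowly than the template still aligns at low cost; a threshold on that cost could then decide whether the repetition counts as correct.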

1. In-Vehicle Data Collection

We assume access to vehicle data on steering, throttle, brake, and other key vehicle parameters. The cameras and microphone will be used to model the driver’s gaze, facial expressions, voice commands, hand positions, and gestures. This information will be combined with the vehicle data to develop models that determine driver activity and intent.
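
Combining vehicle parameters with camera data requires putting both on a common timeline. A minimal sketch, assuming each source carries timestamps in seconds on a shared clock: the `VehicleSample` record, its field names, and the 50 ms matching tolerance are hypothetical placeholders, since the actual signals and sampling rates depend on the vehicle and sensors.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class VehicleSample:
    # Hypothetical record; real signal names and units depend on the vehicle.
    t: float             # timestamp in seconds
    steering_deg: float  # steering wheel angle
    throttle: float      # pedal position, 0..1
    brake: float         # pedal position, 0..1


def nearest_index(times, t):
    """Index of the timestamp in sorted, non-empty `times` closest to t."""
    i = bisect_left(times, t)
    if i == 0:
        return 0
    if i == len(times):
        return len(times) - 1
    # Ties go to the earlier sample.
    return i if times[i] - t < t - times[i - 1] else i - 1


def align(frame_times, samples, max_gap=0.05):
    """Pair each camera frame time with its nearest vehicle sample.

    `samples` must be sorted by timestamp. Frames with no vehicle sample
    within max_gap seconds (an assumed tolerance) are dropped.
    """
    times = [s.t for s in samples]
    pairs = []
    for ft in frame_times:
        s = samples[nearest_index(times, ft)]
        if abs(s.t - ft) <= max_gap:
            pairs.append((ft, s))
    return pairs
```

Nearest-neighbor matching is the simplest choice; interpolating continuous signals such as the steering angle between samples is a reasonable alternative when the vehicle bus is sampled slowly.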

We propose to mount a camera on the rearview mirror, facing the driver. The camera will need stabilization to counter vibration on bumpy roads, and it will be positioned carefully to avoid blocking the driver’s view. We will recruit drivers, collect data (RGB, depth, and audio), and annotate it. The data will be used to:

• Determine the driver’s gaze. Knowing which direction the driver is looking is important for determining the driver’s intention and action. We can also detect critical situations in which the driver is drowsy or distracted from driving, for example by texting, talking to others, or interacting with car functions such as the touch screen or radio buttons.

• Determine the driver’s facial expression. Facial expressions can indicate how comfortable the driver is and how much assistance he/she needs while driving. Facial data annotation will focus mostly on expressions, including normal expressions such as happiness, panic, and drowsiness, as well as abnormal expressions related to illnesses such as stroke or cardiac failure.

• Understand the driver’s gestures. We will extend our previous gesture research [4, 5, 6] to the driving scenario, using the collected in-vehicle data as well as the driving context. We will identify the set of gestures most important for determining driver intent.

• Understand the driver’s intention by integrating all available information, such as audio, gaze, facial expression, hand gesture/location, and vehicle data [7].
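
Gaze-based distraction detection could start from a coarse mapping of gaze angles to in-cabin zones. The sketch below is illustrative only: the zone names, the angular boundaries in `ZONES`, and the 2-second glance threshold are assumed values that would need calibration for a specific vehicle and camera placement. It assumes gaze yaw/pitch in degrees relative to the driver’s straight-ahead direction, as produced by some upstream gaze estimator.

```python
# Hypothetical zones: (name, (yaw_min, yaw_max), (pitch_min, pitch_max)) in degrees.
# Yaw > 0 means looking to the driver's right; pitch > 0 means looking up.
# Intervals are half-open [min, max); the first matching zone wins.
ZONES = [
    ("road_ahead", (-20.0, 20.0), (-8.0, 15.0)),
    ("left_mirror", (-60.0, -20.0), (-15.0, 10.0)),
    ("right_mirror", (35.0, 70.0), (-15.0, 10.0)),
    ("center_console", (10.0, 45.0), (-40.0, -8.0)),
    ("lap", (-20.0, 10.0), (-60.0, -8.0)),
]

# Zones treated as part of normal driving; everything else counts as off-road.
DRIVING_ZONES = {"road_ahead", "left_mirror", "right_mirror"}


def classify_gaze(yaw, pitch):
    """Map a gaze direction to a coarse in-cabin zone name."""
    for name, (y0, y1), (p0, p1) in ZONES:
        if y0 <= yaw < y1 and p0 <= pitch < p1:
            return name
    return "other"


def long_off_road_glances(gaze_seq, fps, max_glance_s=2.0):
    """Return (start_frame, end_frame) of each continuous off-road glance
    lasting longer than max_glance_s seconds (an assumed threshold)."""
    events, start = [], None
    for i, (yaw, pitch) in enumerate(gaze_seq):
        off_road = classify_gaze(yaw, pitch) not in DRIVING_ZONES
        if off_road and start is None:
            start = i
        elif not off_road and start is not None:
            if (i - start) / fps > max_glance_s:
                events.append((start, i - 1))
            start = None
    # Close a glance that is still ongoing at the end of the sequence.
    if start is not None and (len(gaze_seq) - start) / fps > max_glance_s:
        events.append((start, len(gaze_seq) - 1))
    return events
```

For example, at 10 fps a 2.5-second look toward the lap is flagged, while a later 1.5-second look is not. Hand-set boxes are only a starting point; the annotated data would allow learning zone boundaries per driver and vehicle.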

2. Monitoring a Driver’s Intention using Depth/Stereo Cameras and Contextual Information

Monitoring a driver’s intention is important both to prevent accidents caused by a driver’s sudden physical disorder and to provide the convenience of automated adjustments. A driver’s intention can be predicted by estimating the driver’s gaze, head pose, emotion, gestures, and body movement. Contextual information is also beneficial, such as the driving environment and the layout of controls in the vehicle (e.g., the door-lock button and the emergency-light button). For instance, by combining knowledge of which direction the driver is looking with what lies in that direction, we can predict the driver’s intention more precisely. Other contextual information, such as the driver’s destination and schedule, may also be useful. Hence, we would like to investigate a learning-based system that combines the driver’s gaze, head pose, emotion, gestures, and body movement with additional contextual information from both inside and outside the vehicle to predict the driver’s intention.
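
To make the combination step concrete, the sketch below uses a simple weighted log-linear (product-of-experts) late fusion of per-modality intent probabilities with a context-derived prior. This is a swapped-in baseline for illustration, not the multimodal sparse Bayesian dictionary learning of [7] or the learning-based system proposed here; the intent labels, modality names, and weights are hypothetical, and in practice the weights would be learned from data.

```python
import math


def fuse(modality_probs, weights, context_prior, eps=1e-9):
    """Weighted log-linear (product-of-experts) late fusion.

    modality_probs: {modality: {intent: probability}} from per-modality models
    weights:        {modality: non-negative reliability weight}
    context_prior:  {intent: probability} derived from context (route, road layout)
    Returns a normalized {intent: probability}.
    """
    scores = {}
    for intent, prior in context_prior.items():
        s = math.log(prior + eps)  # eps guards against log(0)
        for modality, probs in modality_probs.items():
            s += weights.get(modality, 1.0) * math.log(probs.get(intent, 0.0) + eps)
        scores[intent] = s
    top = max(scores.values())  # subtract the max for numerical stability
    z = sum(math.exp(s - top) for s in scores.values())
    return {intent: math.exp(s - top) / z for intent, s in scores.items()}


# Hypothetical example: context favors keeping the lane, but gaze and head
# pose both point toward the left lane.
prior = {"keep_lane": 0.6, "change_left": 0.2, "change_right": 0.2}
observations = {
    "gaze": {"keep_lane": 0.2, "change_left": 0.7, "change_right": 0.1},
    "head_pose": {"keep_lane": 0.3, "change_left": 0.6, "change_right": 0.1},
}
posterior = fuse(observations, {"gaze": 1.0, "head_pose": 0.5}, prior)
print(max(posterior, key=posterior.get))  # change_left
```

Setting a modality's weight to zero removes its influence, which is one simple way to degrade gracefully when a sensor is occluded or unreliable.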

3. Collaboration with Dynamic Street Awareness Platform (DySAP)

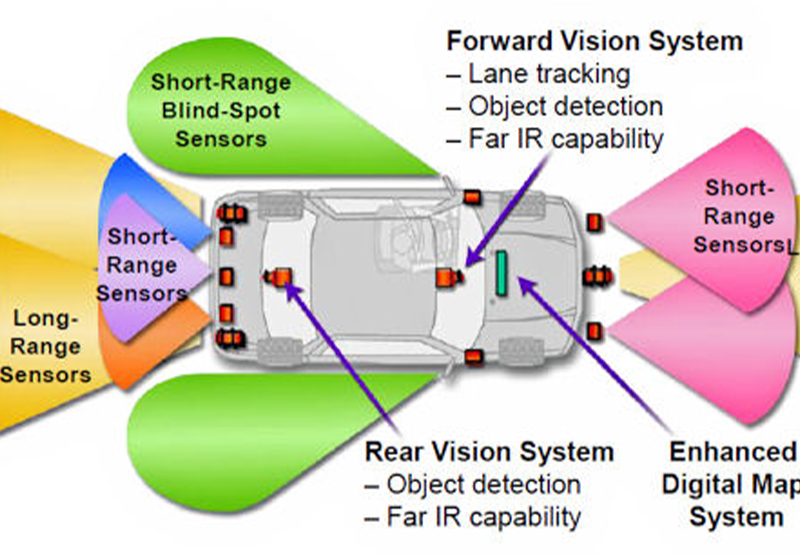

In a separate project, we have been developing a novel collaborative framework for assisted and autonomous driving, in which real-time streaming sensor data from multiple sources (vehicles, pedestrians, and smart street IoT devices such as smart traffic lights and pedestrian crossings) are aggregated, fused, and analyzed to provide dynamic, comprehensive, and predictive awareness of streets, such as the location, motion, and intent of each road user. The street awareness platform, DySAP, will enable new services such as in-vehicle AR-based situational awareness, personalized guidance, and advance warnings.

In this research, we propose to use DySAP to provide contextual information from outside the vehicle to the intent analysis system described in Task 2 above. Based on the direction of the driver’s gaze, the platform can provide contextual data about vehicles and pedestrians in the driver’s field of view, which the driver intent analysis system can use as additional input to help determine driver intent. Moreover, the intent of each driver determined by our proposed system can be shared with DySAP; such intent information from neighboring drivers can be very valuable for improving the accuracy of the predictive street awareness models and for providing more accurate guidance and warnings to neighboring drivers.
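
Selecting the road users in the driver’s field of view amounts to a geometric test on positions reported by DySAP. A minimal sketch, assuming DySAP provides positions in a shared local metric frame and that the gaze heading has already been converted into that frame: the `RoadUser` record, the 30° half-angle, and the 80 m range are assumptions for illustration, not DySAP’s actual interface.

```python
import math
from dataclasses import dataclass


@dataclass
class RoadUser:
    # Hypothetical shape of a road-user report; positions in meters.
    uid: str
    kind: str  # e.g. "vehicle" or "pedestrian"
    x: float
    y: float


def in_field_of_view(driver_xy, gaze_heading_deg, users,
                     half_angle_deg=30.0, max_range_m=80.0):
    """Return road users inside an angular cone around the driver's gaze,
    nearest first.

    gaze_heading_deg is measured counter-clockwise from the +x axis of the
    same frame as the positions (e.g. vehicle heading plus gaze yaw).
    """
    x0, y0 = driver_xy
    visible = []
    for u in users:
        dx, dy = u.x - x0, u.y - y0
        dist = math.hypot(dx, dy)
        if dist == 0 or dist > max_range_m:
            continue
        bearing = math.degrees(math.atan2(dy, dx))
        # Smallest signed angle between bearing and gaze, in [-180, 180).
        diff = (bearing - gaze_heading_deg + 180.0) % 360.0 - 180.0
        if abs(diff) <= half_angle_deg:
            visible.append((dist, u))
    visible.sort(key=lambda pair: pair[0])
    return [u for _, u in visible]
```

Wrapping the angle difference keeps the test correct when the gaze heading is near 0°/360°; a fuller version would also account for occlusion between road users.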

References

[1] B. Kang, Y. Lee, and T. Nguyen, "Depth Adaptive Deep Neural Network for Semantic Segmentation," IEEE Transactions on Multimedia, 2018.

[2] B. Kang, K.-H. Tan, N. Jiang, H.-S. Tai, D. Tretter, and T. Nguyen, "Hand Segmentation for Hand-Object Interaction from Depth map," IEEE Global Conference on Signal and Information Processing (GlobalSIP), 2017.

[3] B. Kang, Y. Lee, and T. Nguyen, "Efficient Hand Articulations Tracking using Adaptive Hand Model and Depth map," Advances in Visual Computing, 2015.

[4] B. Kang, S. Tripathi, and T. Nguyen, "Real-time Sign Language Fingerspelling Recognition using Convolutional Neural Networks from Depth map," IAPR Asian Conference on Pattern Recognition (ACPR), 2015.

[5] W. Wei, Y. Lu, E. Rhoden, and S. Dey, "User Performance Evaluation and Real-Time Guidance in Cloud-Based Physical Therapy Monitoring and Guidance System," Springer Multimedia Tools and Applications, November 2017, pp. 1-31.

[6] W. Wei, C. McElroy, and S. Dey, "Human Action Understanding and Movement Error Identification for the Treatment of Patients with Parkinson’s Disease," Proceedings of the Sixth IEEE International Conference on Healthcare Informatics, New York City, June 2018, pp. 180-190.

[7] I. Fedorov, B. Rao, and T. Nguyen, "Multimodal Sparse Bayesian Dictionary Learning Applied to Multimodal Data Classification," Proceedings of ICASSP, 2017.