Machine Vision & Intelligence

Cooperative Driving with Highly Improved Mapping & Localization, Perception, and Path Planning

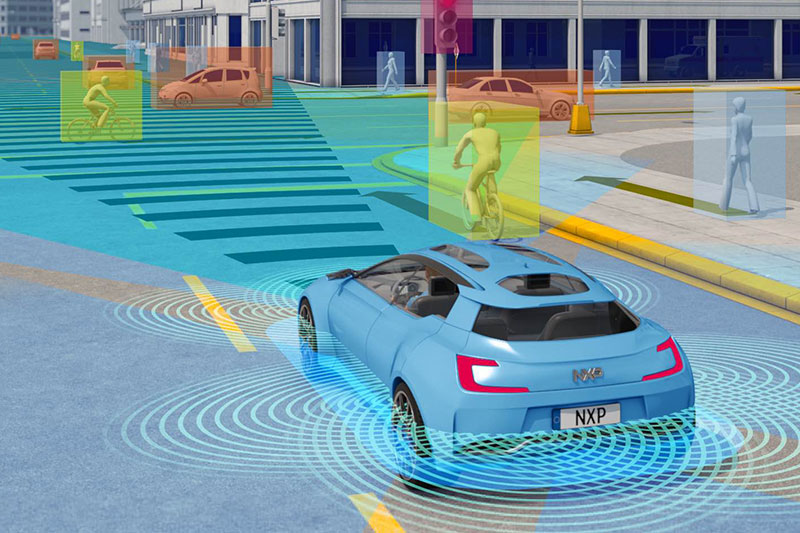

Autonomous and automated driving requires sensing the environment, and driving actions are then chosen based on what is sensed. A single vehicle's sensors face several challenges: non-line-of-sight objects, long-range targets, and bad weather. V2V wireless communication can significantly improve a vehicle's sensing ability and address many of these challenges by combining sensing and driving information collected from multiple cars (cooperative data). In summary, this project aims to characterize the cooperative sensing gain achieved through optimized information acquisition strategies enabled by wireless links between vehicles.

The project has two specific aims. 1) How can we combine sensing data (camera, radar, lidar) from multiple nearby cars, using localization (GPS), to build a global view of the environment for a given car, assuming data from all cars is available offline? The challenge is that much of each car's information, localization in particular, is often uncertain and inaccurate, so we must combine observations from multiple vantage points despite that uncertainty. 2) How critical is each isolated, compressed piece of information from each nearby car in assisting the vehicle with the overall goal of sensing and autonomy, and how can the wireless V2V network be set up hierarchically to deliver the critical, actionable information to the vehicles? Here the challenge is to minimize the data required from other cars. The intellectual goal is an integrated theory and practice of multi-vehicle cooperative sensing via V2V communication. The tangible outcomes will be new industry partners, grant submissions (to federal agencies), prototype/platform developments and demos, and high-visibility publications.
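To make the first aim concrete, here is a minimal sketch of one textbook way to combine observations of the same object from several cars when each car's position is uncertain: map each detection into a shared frame, then take an inverse-variance weighted average. This is an illustration under simplifying assumptions, not the project's method. The `Observation` fields, the isotropic variances, the neglect of heading error, and the assumption that GPS fixes are already converted to a shared local metric frame are all hypothetical choices made for the example.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Observation:
    """One car's report of a detected object (all fields are illustrative)."""
    car_xy: Tuple[float, float]  # car position in a shared local frame, metres
    car_var: float               # assumed isotropic variance of that position, m^2
    heading: float               # car heading in radians, counter-clockwise from +x
    rel_xy: Tuple[float, float]  # object in the car frame (forward, left), metres
    rel_var: float               # assumed isotropic variance of the detection, m^2


def to_global(obs: Observation) -> Tuple[Tuple[float, float], float]:
    """Rotate the relative detection by the heading and add the car position.

    Heading error is ignored, so the variances simply add.
    """
    c, s = math.cos(obs.heading), math.sin(obs.heading)
    forward, left = obs.rel_xy
    gx = obs.car_xy[0] + c * forward - s * left
    gy = obs.car_xy[1] + s * forward + c * left
    return (gx, gy), obs.car_var + obs.rel_var


def fuse(observations: Iterable[Observation]) -> Tuple[Tuple[float, float], float]:
    """Inverse-variance weighted mean of the global positions."""
    weight_sum = 0.0
    x_sum = 0.0
    y_sum = 0.0
    for obs in observations:
        (gx, gy), var = to_global(obs)
        weight = 1.0 / var
        weight_sum += weight
        x_sum += weight * gx
        y_sum += weight * gy
    if weight_sum == 0.0:
        raise ValueError("need at least one observation")
    return (x_sum / weight_sum, y_sum / weight_sum), 1.0 / weight_sum


# Two cars facing each other, both seeing the same object between them.
car_a = Observation(car_xy=(0.0, 0.0), car_var=1.0, heading=0.0,
                    rel_xy=(10.0, 0.0), rel_var=1.0)
car_b = Observation(car_xy=(20.0, 0.0), car_var=3.0, heading=math.pi,
                    rel_xy=(8.0, 0.0), rel_var=1.0)
fused_xy, fused_var = fuse([car_a, car_b])
```

In this example the car with the more accurate position fix pulls the fused estimate toward its own reading, and the fused variance is smaller than either individual variance, which is a simple instance of the cooperative sensing gain described above.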

Leveraging on-going projects:

We have been working on the first problem for a couple of months, using data from Toyota. Toyota ITC has provided us with multi-vehicle sensing data from cars driving in different scenarios, e.g. highways and crowded urban roads. The data streams consist of a u-blox GPS, GoPro camera video, and a Mobileye camera (which provides depth). The data from the two cars is synchronized using a flash repeated at a periodic interval. Note that, although some labeled autonomous driving datasets are publicly available (such as Cityscapes, SYNTHIA, and KITTI), no public datasets are available for cooperative sensing.
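As an illustration of how a flash can be used to align two recordings, the sketch below finds the frame with the largest jump in brightness in each video and takes the difference of the two frame indices as the offset. This is an assumed approach for illustration only; the source does not describe the actual synchronization procedure. The function names are hypothetical, the per-frame mean brightness values are assumed to have been extracted from the videos beforehand, and only a single flash is handled.

```python
from typing import Sequence


def flash_frame(brightness: Sequence[float]) -> int:
    """Return the index of the frame with the largest brightness increase
    relative to the previous frame (assumed to be the flash)."""
    if len(brightness) < 2:
        raise ValueError("need at least two frames")
    best_index = 1
    best_jump = brightness[1] - brightness[0]
    for i in range(2, len(brightness)):
        jump = brightness[i] - brightness[i - 1]
        if jump > best_jump:
            best_jump = jump
            best_index = i
    return best_index


def frame_offset(brightness_a: Sequence[float], brightness_b: Sequence[float]) -> int:
    """Number of frames by which video B lags video A at the flash.

    Frame i of A then corresponds to frame i + offset of B, assuming both
    cameras record at the same frame rate.
    """
    return flash_frame(brightness_b) - flash_frame(brightness_a)


# Hypothetical per-frame mean brightness for the two cars' videos.
video_a = [10.0, 10.0, 11.0, 200.0, 12.0]
video_b = [9.0, 10.0, 10.0, 10.0, 10.0, 190.0, 11.0]
offset = frame_offset(video_a, video_b)
```

With a periodic flash, the same detection could be repeated per interval to check that the offset stays constant and to estimate clock drift between the two recordings.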